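Quick Deployment

Notes

1. This section deploys every component as a container. It is intended for quick product evaluation and for development or testing.

2. If you run containers in production, use a platform such as K8S to manage and operate them.

3. The quick deployment uses a single server with the following specification:

CPU: 8 cores

Memory: 32 GB

Storage: 50 GB

4. The nADX project uses three subdomains. Resolve them through DNS or add them to your hosts file, as appropriate.

adx.abc.com # front-end page address

adxservice.abc.com # management service / API address

exchange.abc.com # ADX exchange service address

Container setup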

Create the nADX container network

docker network create adx-net

Note

A dedicated network lets the containers on this host reach one another by container name.
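If the deployment steps are re-run, `docker network create` fails when the network already exists. A minimal sketch of an idempotent variant follows; `ensure_network` is a hypothetical helper name, not something shipped with nADX.

```shell
# Create the Docker network only if it does not exist yet.
# ensure_network is a hypothetical helper, not part of nADX.
ensure_network() {
  net="$1"
  if docker network inspect "$net" >/dev/null 2>&1; then
    echo "exists: $net"
  else
    docker network create "$net" >/dev/null && echo "created: $net"
  fi
}

# Usage on the Docker host:
#   ensure_network adx-net
```

Containers started later with `--network adx-net` can then address each other by the value of `--name`.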

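Create the working directory

mkdir -p /root/workspace && cd /root/workspace

Create the NFS service

The NFS share is used by Druid, an nADX sub-project, for shared files.

Create the configuration file

Create the file /root/workspace/nfs/exports.txt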

mkdir -p /root/workspace/nfs && cd /root/workspace/nfs

cat > /root/workspace/nfs/exports.txt <<EOF

/druid-nfs *(rw,sync,subtree_check,no_root_squash,fsid=0)

EOF

Run the NFS service

modprobe nfsd

docker run -itd \

--network adx-net \

--name test-adx-nfs \

-v /data/druid/druid-nfs:/druid-nfs \

-v /root/workspace/nfs/exports.txt:/etc/exports:ro \

--privileged \

-p 2049:2049 \

reg.iferox.com/library/erichough/nfs-server

Update the hosts records

Skip this step if DNS resolution is already in place.
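To see which of the three names still lack an entry, a small helper can scan a hosts file. This is only a sketch: `missing_hosts` is a hypothetical name, and it inspects the file you pass in rather than querying DNS.

```shell
# Print every domain that has no entry in the given hosts file.
# missing_hosts is a hypothetical helper, not part of nADX.
missing_hosts() {
  hosts_file="$1"; shift
  for domain in "$@"; do
    if ! awk -v d="$domain" '
      /^[ \t]*#/ { next }                 # skip comment lines
      {
        for (i = 2; i <= NF; i++) {
          if ($i ~ /^#/) break            # ignore trailing comments
          if ($i == d) found = 1
        }
      }
      END { exit !found }
    ' "$hosts_file"; then
      echo "$domain"
    fi
  done
}

# Usage:
#   missing_hosts /etc/hosts adx.abc.com adxservice.abc.com exchange.abc.com
```

An empty result means all three names are already present in that file.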

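==Note: replace "172.16.20.10" in the examples below with the IP address of your Docker server.==

Edit the hosts file on the Docker machine

File location:

Linux: /etc/hosts

# Add the following entries: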

172.16.20.10 adx.abc.com

172.16.20.10 adxservice.abc.com

172.16.20.10 exchange.abc.com

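# adx.abc.com front-end page address

# adxservice.abc.com management service / API address

# exchange.abc.com ADX exchange service address

Edit the hosts file on your local machine

File location:

Windows: C:\Windows\System32\drivers\etc\hosts

Linux: /etc/hosts

# Add the following entries: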

172.16.20.10 adx.abc.com

172.16.20.10 adxservice.abc.com

172.16.20.10 exchange.abc.com

Create a hosts file

This file is mounted into the containers when the services start.

mkdir -p /root/workspace/ && cd /root/workspace/

cat > /root/workspace/hosts <<EOF

172.16.20.10 adx.abc.com

172.16.20.10 adxservice.abc.com

172.16.20.10 exchange.abc.com

EOF

Deploy MySQL

Pull the image

docker pull reg.iferox.com/library/mysql:5.7

Create the configuration file

mkdir -p /root/workspace/mysql && cd /root/workspace/mysql

cat > /root/workspace/mysql/my.cnf <<EOF

[mysqld]

skip_ssl

max-connect-errors=100000

max-connections=2000

group_concat_max_len=102400

server-id=1

expire_logs_days = 5

log-bin=mysql-bin1

datadir=/var/lib/mysql/

socket=/var/lib/mysql/mysql.sock

character-set-server=utf8mb4

collation_server=utf8mb4_unicode_ci

binlog-ignore-db=mysql

slave-skip-errors=all

sync-binlog=1

long_query_time=1

slow_query_log=1

slow_query_log_file=/var/log/mysql/mysql-slow.log

max_allowed_packet=67108864

symbolic-links=0

sql_mode=NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES

[mysqld_safe]

log-error=/var/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pid

[client]

port = 3306

EOF

Run the MySQL container

docker run -itd \

-p 3306:3306 \

-e MYSQL_ROOT_PASSWORD='adx@Pass123!' \

-v /root/workspace/mysql/my.cnf:/etc/mysql/my.cnf \

-v /data/dockerdata/mysql:/var/lib/mysql \

--network adx-net \

--name test-adx-mysql \

reg.iferox.com/library/mysql:5.7

Database setup

Connect to the database:

mysql -uroot -p'adx@Pass123!' -h 127.0.0.1

CREATE DATABASE nadx /*!40100 DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci */;

CREATE DATABASE druid /*!40100 DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci */;

grant all privileges on nadx.* to 'adxuser'@'%' identified by 'adx@Pass123!';

grant all privileges on druid.* to 'adxuser'@'%' identified by 'adx@Pass123!';

use nadx;

Run the adx initialization scripts

Import the schema (nadx_1.5.1.sql)

Download the full SQL file

cd /root/workspace/mysql

wget http://adx-doc.leadswork.net/files/sql/nadx_1.5.1.sql

Import the full SQL file

mysql -uroot -p'adx@Pass123!' -h 127.0.0.1 nadx < nadx_1.5.1.sql

Import the initial data (nadx_init_data.sql)

Download the initial-data SQL file

cd /root/workspace/mysql

wget http://adx-doc.cusper.io/files/sql/nadx_init_data.sql

Import the initial-data SQL file

mysql -uroot -p'adx@Pass123!' -h 127.0.0.1 nadx < nadx_init_data.sql

Deploy Redis

Create the configuration file

Create the redis configuration file locally

mkdir -p /root/workspace/redis && cd /root/workspace/redis

cat > redis.conf <<EOF

#daemonize yes

protected-mode no

#pidfile "/var/run/redis9379.pid"

port 6379

timeout 0

loglevel warning

logfile "/data/redis/redis.log"

databases 1600

save 3600 100

dbfilename "dump.rdb"

dir "/data/redis"

appendonly no

appendfsync everysec

requirepass "redis-user:1234"

EOF

Pull the image

docker pull reg.iferox.com/library/redis:6.2.10

Start the containers

Start the Exchange Cache

docker run -tid \

-v /data/dockerdata/redis-exchange-cache:/data/redis/ \

-v /root/workspace/redis/redis.conf:/home/redis/redis.conf \

--network adx-net \

--name test-adx-redis-exchange \

reg.iferox.com/library/redis:6.2.10 /home/redis/redis.conf

Start the Data Cache

docker run -tid \

-v /data/dockerdata/redis-data-cache:/data/redis/ \

-v /root/workspace/redis/redis.conf:/home/redis/redis.conf \

--network adx-net \

--name test-adx-redis-data \

reg.iferox.com/library/redis:6.2.10 /home/redis/redis.conf

Redis server connection details

exchange-cache:

Server: test-adx-redis-exchange:6379

Password: redis-user:1234

data-cache:

Server: test-adx-redis-data:6379

Password: redis-user:1234
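A quick way to confirm that both instances are up and accept the password is to send `PING` through `redis-cli` inside each container. The sketch below assumes the container names and password listed above; `redis_ping` is a hypothetical helper name.

```shell
# Succeed only if the Redis instance inside the container answers PING with PONG.
# redis_ping is a hypothetical helper, not part of nADX.
redis_ping() {
  container="$1"
  password="$2"
  reply=$(docker exec "$container" redis-cli -a "$password" --no-auth-warning ping 2>/dev/null)
  [ "$reply" = "PONG" ]
}

# Usage on the Docker host:
#   for c in test-adx-redis-exchange test-adx-redis-data; do
#     redis_ping "$c" 'redis-user:1234' && echo "$c ok" || echo "$c FAILED"
#   done
```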

Connection command:

docker exec -it test-adx-redis-exchange redis-cli -a redis-user:1234

Deploy Zookeeper

Pull the image

docker pull reg.iferox.com/library/cusper-zookeeper-3.5.10

Start the container

docker run -itd \

--network adx-net \

--name test-adx-zookeeper \

-v /data/dockerdata/zookeeper:/data/zookeeper \

reg.iferox.com/library/cusper-zookeeper-3.5.10

Deploy Kafka

Pull the image

docker pull reg.iferox.com/library/cusper-kafka:2.11-1.1.1

Create the configuration file

Create the kafka configuration file locally

Remember to adjust the "zookeeper.connect" setting.

mkdir -p /root/workspace/kafka && cd /root/workspace/kafka

cat > server.properties <<EOF

broker.id=1

port=9092

num.network.threads=6

num.io.threads=32

log.flush.interval.ms=1000

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=220857600

log.dirs=/data/kafka

num.partitions=1

num.recovery.threads.per.data.dir=1

log.retention.hours=48

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

log.cleaner.enable=true

delete.topic.enable=true

auto.create.topics.enable=false

zookeeper.connect=test-adx-zookeeper:2181

zookeeper.connection.timeout.ms=6000

offsets.topic.replication.factor=2

EOF

Start the container

docker run -itd \

--network adx-net \

--name test-adx-kafka \

-v /data/dockerdata/kafka:/data/kafka \

reg.iferox.com/library/cusper-kafka:2.11-1.1.1

Create topics
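The topic-creation commands in this step differ only in the topic name, so as an alternative to running them one by one they can be written as a loop. This is a sketch: `create_topics` is a hypothetical helper, and `--if-not-exists` is an addition that makes a re-run harmless.

```shell
# Create each named topic with 1 partition and replication factor 1.
# create_topics is a hypothetical helper; --if-not-exists is an added safeguard.
create_topics() {
  for topic in "$@"; do
    docker exec test-adx-kafka kafka-topics.sh \
      --zookeeper test-adx-zookeeper:2181 \
      --create --if-not-exists \
      --partitions 1 --replication-factor 1 \
      --topic "$topic"
  done
}

# Usage on the Docker host:
#   create_topics traffic performance re_bid
```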

docker exec test-adx-kafka kafka-topics.sh --zookeeper test-adx-zookeeper:2181 --create --partitions 1 --replication-factor 1 --topic traffic

docker exec test-adx-kafka kafka-topics.sh --zookeeper test-adx-zookeeper:2181 --create --partitions 1 --replication-factor 1 --topic performance

docker exec test-adx-kafka kafka-topics.sh --zookeeper test-adx-zookeeper:2181 --create --partitions 1 --replication-factor 1 --topic re_bid

Deploy Druid

Pull the image

docker pull reg.iferox.com/library/cusper-druid:0.20.0-cluster-v1-nfs

Start the containers

Set the parameters

Create /root/workspace/druid/druid-env.txt

mkdir -p /root/workspace/druid/ && cd /root/workspace/druid/

cat > /root/workspace/druid/druid-env.txt <<EOF

ZOOKEEPER_HOST=test-adx-zookeeper:2181

MYSQL_HOST=test-adx-mysql

MYSQL_USER=adxuser

MYSQL_PASSWORD=adx@Pass123!

MYSQL_DB_NAME=druid

MYSQL_CONNECT_URI=jdbc:mysql://test-adx-mysql:3306/druid

NFS_SERVER_HOST=test-adx-nfs

DEEP_STORAGE_TYPE=nfs

EOF

Start the master

docker run -itd \

--network adx-net \

--name test-adx-druid-master \

--privileged \

--env-file=/root/workspace/druid/druid-env.txt \

-v /data/dockerdata/druid/druid-master:/usr/local/app/druid/var \

reg.iferox.com/library/cusper-druid:0.20.0-cluster-v1-nfs master

Start the historical

export num=01 && \

docker run -itd \

--network adx-net \

--name test-adx-druid-historical-$num \

--privileged \

--env-file=/root/workspace/druid/druid-env.txt \

-v /data/dockerdata/druid/druid-historical-$num/var:/usr/local/app/druid/var \

-v /data/dockerdata/druid/druid-historical-$num/segment-cache:/data/druid/segment-cache \

reg.iferox.com/library/cusper-druid:0.20.0-cluster-v1-nfs historical && \

unset num

# The command above starts a container named test-adx-druid-historical-01. To start a second historical, change the value of num.

Start the middlemanager

export num=01 && \

docker run -itd \

--network adx-net \

--name test-adx-druid-middlemanager-$num \

--privileged \

--env-file=/root/workspace/druid/druid-env.txt \

-v /data/dockerdata/druid/druid-middlemanager-$num/var:/usr/local/app/druid/var \

reg.iferox.com/library/cusper-druid:0.20.0-cluster-v1-nfs middlemanager && \

unset num

# The command above starts a container named test-adx-druid-middlemanager-01. To start a second middlemanager, change the value of num.

Start the query node

export num=01 && \

docker run -itd \

--network adx-net \

--name test-adx-druid-query-$num \

--privileged \

--env-file=/root/workspace/druid/druid-env.txt \

-p 8888:8888 \

-v /data/dockerdata/druid/druid-query-$num/var:/usr/local/app/druid/var \

reg.iferox.com/library/cusper-druid:0.20.0-cluster-v1-nfs query && \

unset num

# The command above starts a container named test-adx-druid-query-01. To start a second query node, change the value of num.

Check the service

Open http://${docker-server-ip}:8888 in a browser.
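The same check can be scripted. Druid processes expose a `/status/health` endpoint that returns `true` once the process is up; the sketch below polls it on port 8888. `wait_for_druid` and its retry defaults are assumptions made for illustration.

```shell
# Poll Druid's /status/health endpoint until it returns "true".
# wait_for_druid and its defaults (30 tries, 2 s apart) are hypothetical.
wait_for_druid() {
  base_url="$1"          # e.g. http://172.16.20.10:8888
  attempts="${2:-30}"
  delay="${3:-2}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    if [ "$(curl -s "$base_url/status/health")" = "true" ]; then
      echo "druid is healthy"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "druid did not become healthy" >&2
  return 1
}

# Usage (replace the address with your Docker server IP):
#   wait_for_druid http://172.16.20.10:8888
```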

Submit the Druid tasks

1. Create the file nadx-druid-overall-ingestion-task.json. Set "bootstrap.servers" to the Kafka container name; in this example it is "test-adx-kafka:9092".

{

"type": "kafka",

"dataSchema": {

"dataSource": "nadx_ds_overall",

"parser": {

"type": "string",

"parseSpec": {

"format": "json",

"columns": [

"timestamp",

"supply_bd_id",

"supply_am_id",

"supply_id",

"supply_protocol",

"request_flag",

"ad_format",

"site_app_id",

"placement_id",

"position",

"country",

"region",

"city",

"carrier",

"os",

"os_version",

"device_type",

"device_brand",

"device_model",

"age",

"gender",

"cost_currency",

"demand_bd_id",

"demand_am_id",

"demand_id",

"demand_protocol",

"revenue_currency",

"demand_seat_id",

"demand_campaign_id",

"demand_creative_id",

"target_site_app_id",

"bid_price_model",

"traffic_type",

"currency",

"bundle",

"size",

"supply_request_count",

"supply_invalid_request_count",

"supply_bid_count",

"supply_bid_price_cost_currency",

"supply_bid_price",

"supply_win_count",

"supply_win_price_cost_currency",

"supply_win_price",

"demand_request_count",

"demand_bid_count",

"demand_bid_price_revenue_currency",

"demand_bid_price",

"demand_win_count",

"demand_win_price_revenue_currency",

"demand_win_price",

"demand_timeout_count",

"impression_count",

"impression_cost_currency",

"impression_cost",

"impression_revenue_currency",

"impression_revenue",

"click_count",

"click_cost_currency",

"click_cost",

"click_revenue_currency",

"click_revenue",

"conversion_count",

"conversion_price"

],

"timestampSpec": {

"format": "posix",

"column": "timestamp"

},

"dimensionsSpec": {

"dimensions": [

"supply_bd_id",

"supply_am_id",

"supply_id",

"ad_format",

"site_app_id",

"placement_id",

"bundle",

"size",

"traffic_type",

"country",

"os",

"cost_currency",

"demand_bd_id",

"demand_am_id",

"demand_id",

"demand_seat_id",

"demand_campaign_id",

"demand_creative_id",

"revenue_currency",

"bid_price_model",

"currency"

],

"dimensionExclusions": [

"supply_protocol",

"request_flag",

"position",

"region",

"city",

"carrier",

"os_version",

"device_type",

"device_brand",

"device_model",

"age",

"gender",

"demand_protocol",

"target_site_app_id"

],

"spatialDimensions": []

}

}

},

"metricsSpec": [

{

"fieldName": "supply_request_count",

"name": "supply_request_count",

"type": "longSum"

},

{

"fieldName": "supply_invalid_request_count",

"name": "supply_invalid_request_count",

"type": "longSum"

},

{

"fieldName": "supply_bid_count",

"name": "supply_bid_count",

"type": "longSum"

},

{

"fieldName": "supply_bid_price_cost_currency",

"name": "supply_bid_price_cost_currency",

"type": "doubleSum"

},

{

"fieldName": "supply_bid_price",

"name": "supply_bid_price",

"type": "doubleSum"

},

{

"fieldName": "supply_win_count",

"name": "supply_win_count",

"type": "longSum"

},

{

"fieldName": "supply_win_price_cost_currency",

"name": "supply_win_price_cost_currency",

"type": "doubleSum"

},

{

"fieldName": "supply_win_price",

"name": "supply_win_price",

"type": "doubleSum"

},

{

"fieldName": "demand_request_count",

"name": "demand_request_count",

"type": "longSum"

},

{

"fieldName": "demand_bid_count",

"name": "demand_bid_count",

"type": "longSum"

},

{

"fieldName": "demand_bid_price_revenue_currency",

"name": "demand_bid_price_revenue_currency",

"type": "doubleSum"

},

{

"fieldName": "demand_bid_price",

"name": "demand_bid_price",

"type": "doubleSum"

},

{

"fieldName": "demand_win_count",

"name": "demand_win_count",

"type": "longSum"

},

{

"fieldName": "demand_win_price_revenue_currency",

"name": "demand_win_price_revenue_currency",

"type": "doubleSum"

},

{

"fieldName": "demand_win_price",

"name": "demand_win_price",

"type": "doubleSum"

},

{

"fieldName": "demand_timeout_count",

"name": "demand_timeout_count",

"type": "longSum"

},

{

"fieldName": "impression_count",

"name": "impression_count",

"type": "longSum"

},

{

"fieldName": "impression_cost_currency",

"name": "impression_cost_currency",

"type": "doubleSum"

},

{

"fieldName": "impression_cost",

"name": "impression_cost",

"type": "doubleSum"

},

{

"fieldName": "impression_revenue_currency",

"name": "impression_revenue_currency",

"type": "doubleSum"

},

{

"fieldName": "impression_revenue",

"name": "impression_revenue",

"type": "doubleSum"

},

{

"fieldName": "click_count",

"name": "click_count",

"type": "longSum"

},

{

"fieldName": "click_cost_currency",

"name": "click_cost_currency",

"type": "doubleSum"

},

{

"fieldName": "click_cost",

"name": "click_cost",

"type": "doubleSum"

},

{

"fieldName": "click_revenue_currency",

"name": "click_revenue_currency",

"type": "doubleSum"

},

{

"fieldName": "click_revenue",

"name": "click_revenue",

"type": "doubleSum"

},

{

"fieldName": "conversion_count",

"name": "conversion_count",

"type": "longSum"

},

{

"fieldName": "conversion_price",

"name": "conversion_price",

"type": "doubleSum"

}

],

"granularitySpec": {

"type": "uniform",

"rollup" : true,

"segmentGranularity": "DAY",

"queryGranularity": "HOUR"

}

},

"tuningConfig": {

"type": "kafka",

"resetOffsetAutomatically": "true",

"maxRowsPerSegment": 5000000

},

"ioConfig": {

"topic": "traffic",

"useEarliestOffset": "true",

"consumerProperties": {

"bootstrap.servers": "test-adx-kafka:9092"

},

"taskCount": 1,

"replicas": 1,

"taskDuration": "PT1H"

}

}

2. Create the file nadx-druid-details-ingestion-task.json. Set "bootstrap.servers" to the Kafka container name; in this example it is "test-adx-kafka:9092".

File contents

{

"type": "kafka",

"dataSchema": {

"dataSource": "nadx_ds_main",

"parser": {

"type": "string",

"parseSpec": {

"format": "json",

"columns": [

"timestamp",

"supply_bd_id",

"supply_am_id",

"supply_id",

"supply_protocol",

"request_flag",

"ad_format",

"site_app_id",

"placement_id",

"position",

"country",

"region",

"city",

"carrier",

"os",

"os_version",

"device_type",

"device_brand",

"device_model",

"age",

"gender",

"cost_currency",

"demand_bd_id",

"demand_am_id",

"demand_id",

"demand_protocol",

"revenue_currency",

"demand_seat_id",

"demand_campaign_id",

"demand_creative_id",

"target_site_app_id",

"bid_price_model",

"traffic_type",

"currency",

"bundle",

"size",

"supply_request_count",

"supply_invalid_request_count",

"supply_bid_count",

"supply_bid_price_cost_currency",

"supply_bid_price",

"supply_win_count",

"supply_win_price_cost_currency",

"supply_win_price",

"demand_request_count",

"demand_bid_count",

"demand_bid_price_revenue_currency",

"demand_bid_price",

"demand_win_count",

"demand_win_price_revenue_currency",

"demand_win_price",

"demand_timeout_count",

"impression_count",

"impression_cost_currency",

"impression_cost",

"impression_revenue_currency",

"impression_revenue",

"click_count",

"click_cost_currency",

"click_cost",

"click_revenue_currency",

"click_revenue",

"conversion_count",

"conversion_price"

],

"timestampSpec": {

"format": "posix",

"column": "timestamp"

},

"dimensionsSpec": {

"dimensions": [

"supply_bd_id",

"supply_am_id",

"supply_id",

"supply_protocol",

"request_flag",

"ad_format",

"site_app_id",

"placement_id",

"position",

"country",

"state",

"city",

"carrier",

"os",

"os_version",

"device_type",

"device_brand",

"device_model",

"age",

"gender",

"cost_currency",

"demand_bd_id",

"demand_am_id",

"demand_id",

"demand_protocol",

"revenue_currency",

"demand_seat_id",

"demand_campaign_id",

"demand_creative_id",

"target_site_app_id",

"bid_price_model",

"traffic_type",

"currency",

"bundle",

"size"

],

"dimensionExclusions": [],

"spatialDimensions": []

}

}

},

"metricsSpec": [

{

"fieldName": "supply_request_count",

"name": "supply_request_count",

"type": "longSum"

},

{

"fieldName": "supply_invalid_request_count",

"name": "supply_invalid_request_count",

"type": "longSum"

},

{

"fieldName": "supply_bid_count",

"name": "supply_bid_count",

"type": "longSum"

},

{

"fieldName": "supply_bid_price_cost_currency",

"name": "supply_bid_price_cost_currency",

"type": "doubleSum"

},

{

"fieldName": "supply_bid_price",

"name": "supply_bid_price",

"type": "doubleSum"

},

{

"fieldName": "supply_win_count",

"name": "supply_win_count",

"type": "longSum"

},

{

"fieldName": "supply_win_price_cost_currency",

"name": "supply_win_price_cost_currency",

"type": "doubleSum"

},

{

"fieldName": "supply_win_price",

"name": "supply_win_price",

"type": "doubleSum"

},

{

"fieldName": "demand_request_count",

"name": "demand_request_count",

"type": "longSum"

},

{

"fieldName": "demand_bid_count",

"name": "demand_bid_count",

"type": "longSum"

},

{

"fieldName": "demand_bid_price_revenue_currency",

"name": "demand_bid_price_revenue_currency",

"type": "doubleSum"

},

{

"fieldName": "demand_bid_price",

"name": "demand_bid_price",

"type": "doubleSum"

},

{

"fieldName": "demand_win_count",

"name": "demand_win_count",

"type": "longSum"

},

{

"fieldName": "demand_win_price_revenue_currency",

"name": "demand_win_price_revenue_currency",

"type": "doubleSum"

},

{

"fieldName": "demand_win_price",

"name": "demand_win_price",

"type": "doubleSum"

},

{

"fieldName": "demand_timeout_count",

"name": "demand_timeout_count",

"type": "longSum"

},

{

"fieldName": "impression_count",

"name": "impression_count",

"type": "longSum"

},

{

"fieldName": "impression_cost_currency",

"name": "impression_cost_currency",

"type": "doubleSum"

},

{

"fieldName": "impression_cost",

"name": "impression_cost",

"type": "doubleSum"

},

{

"fieldName": "impression_revenue_currency",

"name": "impression_revenue_currency",

"type": "doubleSum"

},

{

"fieldName": "impression_revenue",

"name": "impression_revenue",

"type": "doubleSum"

},

{

"fieldName": "click_count",

"name": "click_count",

"type": "longSum"

},

{

"fieldName": "click_cost_currency",

"name": "click_cost_currency",

"type": "doubleSum"

},

{

"fieldName": "click_cost",

"name": "click_cost",

"type": "doubleSum"

},

{

"fieldName": "click_revenue_currency",

"name": "click_revenue_currency",

"type": "doubleSum"

},

{

"fieldName": "click_revenue",

"name": "click_revenue",

"type": "doubleSum"

},

{

"fieldName": "conversion_count",

"name": "conversion_count",

"type": "longSum"

},

{

"fieldName": "conversion_price",

"name": "conversion_price",

"type": "doubleSum"

}

],

"granularitySpec": {

"type": "uniform",

"rollup" : true,

"segmentGranularity": "HOUR",

"queryGranularity": "HOUR"

}

},

"tuningConfig": {

"type": "kafka",

"resetOffsetAutomatically": "true",

"maxRowsPerSegment": 5000000

},

"ioConfig": {

"topic": "traffic",

"useEarliestOffset": "true",

"consumerProperties": {

"bootstrap.servers": "test-adx-kafka:9092"

},

"taskCount": 1,

"replicas": 1,

"taskDuration": "PT1H"

}

}

3. Submit the tasks

curl -s -X POST -H 'Content-Type: application/json' -d @nadx-druid-overall-ingestion-task.json http://${docker-server-ip}:8888/druid/indexer/v1/supervisor

curl -s -X POST -H 'Content-Type: application/json' -d @nadx-druid-details-ingestion-task.json http://${docker-server-ip}:8888/druid/indexer/v1/supervisor

Deploy Storm

Pull the image

docker pull reg.iferox.com/nadx/nadx-stream:1.11.6.20230221a

Create the configuration files

Set the environment variables

Create /root/workspace/storm/storm-env.txt with the following contents.

Note: STORM_NIMBUS_SEED must match the name of the Storm nimbus container.

mkdir -p /root/workspace/storm && cd /root/workspace/storm

cat > /root/workspace/storm/storm-env.txt <<EOF

ZOOKEEPER_HOST=test-adx-zookeeper:2181

ZOOKEEPER_KAFKA=test-adx-kafka:9092

MYSQL_HOST=test-adx-mysql

MYSQL_USER=adxuser

MYSQL_PASSWORD=adx@Pass123!

MYSQL_ADX_DB_NAME=nadx

REDIS_EXCHANGE_HOST=test-adx-redis-exchange

REDIS_DATA_HOST=test-adx-redis-data

REDIS_AUTH=redis-user:1234

STORM_NIMBUS_SEED=test-adx-storm-nimbus-01

EOF

Create the storm configuration template

Create the file /root/workspace/storm/storm.yaml.template

mkdir -p /root/workspace/storm && cd /root/workspace/storm

cat > /root/workspace/storm/storm.yaml.template <<EOF

storm.zookeeper.servers:

- "zookeeper_server"

storm.zookeeper.port: 2181

storm.local.hostname: "local_ip"

nimbus.seeds: ["nimbus_server_host"]

nimbus.thrift.max_buffer_size: 1048576

nimbus.childopts: "-Xmx768m"

nimbus.task.timeout.secs: 60

nimbus.supervisor.timeout.secs: 60

nimbus.monitor.freq.secs: 10

nimbus.cleanup.inbox.freq.secs: 600

nimbus.inbox.jar.expiration.secs: 3600

nimbus.task.launch.secs: 240

nimbus.reassign: true

nimbus.file.copy.expiration.secs: 600

storm.log.dir: "/logs"

storm.local.dir: "/data/storm"

storm.zookeeper.root: "/nadxstorm"

storm.cluster.mode: "distributed"

storm.local.mode.zmq: false

ui.port: 8080

ui.childopts: "-Xmx256m"

supervisor.slots.ports:

- 6701

- 6702

- 6703

- 6704

supervisor.enable: true

supervisor.childopts: "-Xmx2048m"

supervisor.worker.start.timeout.secs: 240

supervisor.worker.timeout.secs: 30

supervisor.monitor.frequency.secs: 3

supervisor.heartbeat.frequency.secs: 5

worker.childopts: "-Xmx2048m"

topology.receiver.buffer.size: 8

topology.transfer.buffer.size: 32

topology.executor.receive.buffer.size: 16384

topology.executor.send.buffer.size: 16384

storm.zookeeper.session.timeout: 10000

storm.zookeeper.connection.timeout: 3000

storm.zookeeper.retry.times: 6

storm.zookeeper.retry.interval: 2000

storm.zookeeper.retry.intervalceiling.millis: 30000

storm.messaging.transport: "org.apache.storm.messaging.netty.Context"

storm.messaging.netty.server_worker_threads: 50

storm.messaging.netty.client_worker_threads: 50

storm.messaging.netty.buffer_size: 5242880

storm.messaging.netty.transfer.batch.size: 524288

storm.messaging.netty.max_retries: 300

storm.messaging.netty.max_wait_ms: 2000

storm.messaging.netty.min_wait_ms: 100

EOF

Create the stream configuration file

/root/workspace/storm/nadx-stream.conf

mkdir -p /root/workspace/storm && cd /root/workspace/storm

cat > /root/workspace/storm/nadx-stream.conf <<EOF

#env

environment = "prod"

#kafka

broker.zk.url = "test-adx-zookeeper:2181"

broker.zk.path = "/brokers"

broker.list = "test-adx-kafka:9092"

topic.traffic.name = "traffic"

topic.traffic.partition = 1

topic.re.bid.name = "re_bid"

topic.re.bid.partition = 1

topic.performance.name = "performance"

topic.performance.partition = 1

#save topology

save.bid.spout.per.partition = 1

#save.bid.spout.fetch.size.bytes = 31457280

save.bid.spout.fetch.size.bytes = 2097152

save.bid.worker.number = 1

save.bid.qps.parallelism = 1

save.bid.data.parallelism = 1

save.bid.spout.from.latest = 0

#re-save bid topology

re.save.bid.spout.per.partition = 1

re.save.bid.spout.fetch.size.bytes = 1048576

re.save.bid.max.round = 10

#performance topology

performance.spout.per.partition = 1

performance.spout.fetch.size.bytes = 1048576

performance.worker.number = 1

performance.parallelism = 1

performance.handler.max.count = 100

performance.window.seconds = 3600

#cron topology

cron.bid.hash.init.interval.seconds = 600

cron.tick.interval.seconds = 60

cron.bid.hash.init.delta.seconds = 60

cron.currency.refresh.interval.seconds = 86400

cron.currency.refresh.url = "https://openexchangerates.org/api/latest.json?app_id=14ddeae2089e419eb1d3c0cd1dac0008"

#data cache

data.cache.host = "test-adx-redis-data"

data.cache.port = 6379

data.cache.password = "redis-user:1234"

data.cache.pool.max.total = 50

data.cache.hmset.batch = 20

#data.cache.win.url.list = "list_nadx_win_url"

data.cache.url.list = "list_nadx_url"

exchange.cache.host = "test-adx-redis-exchange"

exchange.cache.port = 6379

exchange.cache.password = "redis-user:1234"

exchange.cache.pool.max.total = 50

currency.hash = "hash_currency_exchange"

cron.performance.send.minutes = 3

#druid

druid.query.time.out = 1800000

druid.url = "http://test-adx-druid-query-01:8888/druid/v2/?pretty"

druid.index.server.url = "http://test-adx-druid-query-01:8888/druid/indexer/v1/task"

druid.data.source = "nadx_ds_main"

druid.migrate.raw.data.dir = "/share/druidRawData"

druid.migrate.raw.data.buffer.byte = 1048576

EOF

Start the containers

Start the nimbus

export num=01 && \

docker run -itd \

--network adx-net \

--name test-adx-storm-nimbus-$num \

--env-file=/root/workspace/storm/storm-env.txt \

-v /root/workspace/storm/nadx-stream.conf:/opt/nadx/stream/conf/nadx-stream.conf \

-v /root/workspace/storm/storm.yaml.template:/conf/storm.yaml.template \

-v /data/dockerdata/storm/nimubs-$num-data:/data \

-v /data/dockerdata/storm/nimubs-$num-data/logs:/logs \

reg.iferox.com/nadx/nadx-stream:1.11.6.20230221a ./start.sh nimbus && \

unset num

Start the supervisor

export num=01 && \

docker run -itd \

--network adx-net \

--name test-adx-storm-supervisor-$num \

--env-file=/root/workspace/storm/storm-env.txt \

-v /root/workspace/storm/nadx-stream.conf:/opt/nadx/stream/conf/nadx-stream.conf \

-v /root/workspace/storm/storm.yaml.template:/conf/storm.yaml.template \

-v /data/dockerdata/storm/supervisor-$num-data:/data \

-v /data/dockerdata/storm/supervisor-$num-data/logs:/logs \

reg.iferox.com/nadx/nadx-stream:1.11.6.20230221a ./start.sh supervisor && \

unset num

Submit the stream tasks

docker exec test-adx-storm-nimbus-01 ./start.sh stream

Check the stream topologies

docker exec test-adx-storm-nimbus-01 storm list

Reference: command to stop the stream topologies

You do not need to run this step now; use it only when resubmitting the tasks.

docker exec test-adx-storm-nimbus-01 storm kill topology_cron topology_save_bid topology_performance

Deploy the ADX applications

Start the Service

Create the configuration file

Create /root/workspace/service/application-dev.yml

mkdir -p /root/workspace/service && cd /root/workspace/service

cat > /root/workspace/service/application-dev.yml <<EOF

spring:

datasource:

url: jdbc:mysql://test-adx-mysql:3306/nadx?useUnicode=true&useSSL=false&autoReconnect=false&rewriteBatchedStatements=true

driver-class-name: com.mysql.cj.jdbc.Driver

username: adxuser

password: adx@Pass123!

# Use the Druid data source

type: com.alibaba.druid.pool.DruidDataSource

# Additional connection-pool settings, applied to all data sources above

# Initial size, minimum, maximum

druid:

initialSize: 5

minIdle: 5

maxActive: 20

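# Maximum time to wait when acquiring a connection

maxWait: 60000

# Interval, in milliseconds, between checks for idle connections that should be closed

timeBetweenEvictionRunsMillis: 60000

# Minimum time, in milliseconds, that a connection stays in the pool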

minEvictableIdleTimeMillis: 300000

validationQuery: SELECT 1 FROM DUAL

testWhileIdle: true

testOnBorrow: false

testOnReturn: false

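# Enable PSCache and set its size per connection

poolPreparedStatements: true

maxPoolPreparedStatementPerConnectionSize: 20

# Filters for monitoring statistics; without them the monitoring page cannot collect SQL statistics. 'wall' is the firewall filter

filters: stat,log4j,config

# Enable mergeSql and slow-SQL logging through connectionProperties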

connectionProperties: druid.stat.mergeSql=true;druid.stat.slowSqlMillis=5000;

redis:

# Redis database index (default 0)

database: 0

# Redis server address

host: test-adx-redis-exchange

# Redis server port

port: 6379

# Redis server password (empty by default)

password: redis-user:1234

pool:

max-wait: 1

max-idle: 8

max-active: 8

min-idle: 0

timeout: 0

session:

store-type: redis

mvc:

dispatch-options-request: true

server:

tomcat:

basedir: /tmp

logging:

path: /data/logs/nadx-service

level:

root: "INFO"

druid:

queryDruidUrl: "http://test-adx-druid-query-01:8888/druid/v2/?pretty"

dataSource: "nadx_ds_main"

druidQueryMaxLimit: 500000

druidQueryTimeOut: 180000

api:

lockKeyExpireTime: 10

apiKey: "1234"

# This path is shared with the front-end resources

report:

downloadPath: "/data/report"

downloadUrl: "http://adx.abc.com"

downloadExpireTime: 3600

demandTestUrl: "http://exchange.abc.com/demand/test"

sellersJsonPath: "/data/sellers"

httpSessionTimeOutSecond: 86400

EOF

Pull the image

docker pull reg.iferox.com/nadx/nadx-service:0.16.6.20230220a.bj

Run the Service

docker run -itd \

--network adx-net \

--name test-adx-service \

-p 9201:9201 \

-v /root/workspace/service/application-dev.yml:/opt/nadx/service/application-dev.yml \

-v /root/workspace/hosts:/etc/hosts \

-v /data/dockerdata/service-data/logs:/data/logs \

-v /data/dockerdata/service-data/sellers:/data/sellers \

-v /data/dockerdata/service-data/report:/data/report \

reg.iferox.com/nadx/nadx-service:0.16.6.20230220a.bj

Start the Front service

Create the configuration files

Create the front-nginx configuration file

Create nadx-front-nginx.conf and adjust the domain name settings.

mkdir -p /root/workspace/front && cd /root/workspace/front

cat > /root/workspace/front/nadx-front-nginx.conf <<EOF

server {

listen 9202 default_server;

server_name adx.abc.com;

location ~ /sellers {

root /data/sellers;

}

location /data/report/ {

alias /data/report/;

}

location / {

add_header Cache-Control "no-cache, no-store";

root /opt/nadx-front/dist;

index index.html index.htm;

}

error_page 404 /404.html;

location = /40x.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

EOF

Front application configuration file

Create config.js and adjust the domain name settings.

mkdir -p /root/workspace/front && cd /root/workspace/front

cat > /root/workspace/front/config.js <<EOF

window.g = {

"BID_DOMAIN": "exchange.abc.com",

"BASE_API": "http://adxservice.abc.com/",

"MAX_DAY_WITH_HOUR": 7,

"NEED_REPORT_DETAIL": 1,

"FN_ADX_BID_PRICE": 0,

"PAGE_TITLE": "Cusper Demo",

"LOGIN_LOGO": "",

"SITE_FAVICON": "//demo.leadsmatic.net/static/images/favicon.ico"

}

EOF

Pull the image

docker pull reg.iferox.com/nadx/nadx-front:2.7.11.20230224a

Run the Front service

docker run -itd \

--network adx-net \

--name test-adx-front \

-p 9202:9202 \

-v /root/workspace/front/nadx-front-nginx.conf:/etc/nginx/http.d/nadx-front-nginx.conf \

-v /root/workspace/front/config.js:/opt/nadx-front/dist/static/config.js \

-v /data/dockerdata/service-data/sellers:/data/sellers \

-v /data/dockerdata/service-data/report:/data/report \

-v /data/dockerdata/front/log:/var/log/nginx \

-v /root/workspace/hosts:/etc/hosts \

reg.iferox.com/nadx/nadx-front:2.7.11.20230224a

Start the Nginx proxy service

Create the configuration files

Create the exchange nginx configuration file

mkdir -p /root/workspace/nginx/ && cd /root/workspace/nginx/

cat > nadx-exchange-nginx.conf <<EOF

server {

listen 80;

server_name exchange.abc.com;

error_log /var/log/nginx/adx-exchange-error.log debug;

access_log /var/log/nginx/adx-exchange-access.log main;

location / {

51D_match_all x-device DeviceType,HardwareVendor,HardwareModel,PlatformName,PlatformVersion,BrowserName,BrowserVersion,IsCrawler;

proxy_pass http://test-adx-exchange:9200;

proxy_next_upstream off;

proxy_redirect off;

proxy_buffering off;

set_real_ip_from 10.0.0.0/8;

set_real_ip_from 172.16.0.0/12;

set_real_ip_from 192.168.0.0/16;

real_ip_header X-Forwarded-For;

proxy_set_header Host \$host;

proxy_set_header X-Real-IP \$remote_addr;

proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;

proxy_set_header COUNTRY \$geoip2_data_country_code;

proxy_set_header REGION \$geoip2_data_region_code;

proxy_set_header CITY \$geoip2_data_city_name;

proxy_set_header ISP \$geoip2_data_isp;

proxy_set_header x-device \$http_x_device;

}

location ~ /\.ht {

deny all;

}

}

EOF

Create the service nginx configuration file
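The nginx heredocs in this section write `\$host` rather than `$host` because an unquoted `EOF` delimiter lets the shell expand variables before the file is written. The short demonstration below is not part of the deployment; it only shows that the escaped form and a quoted delimiter (`<<'EOF'`) produce the same text.

```shell
# A shell variable that would otherwise leak into the generated config.
host="expanded-by-shell"

# Unquoted delimiter: $host would be expanded, so it is written as \$host.
unquoted=$(cat <<EOF
proxy_set_header Host \$host;
EOF
)

# Quoted delimiter: nothing is expanded, so no backslashes are needed.
quoted=$(cat <<'EOF'
proxy_set_header Host $host;
EOF
)

echo "$unquoted"
echo "$quoted"
```

Either form works; if you switch to a quoted delimiter, remove the backslashes, otherwise they end up in the file.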

mkdir -p /root/workspace/nginx/ && cd /root/workspace/nginx/

cat > nadx-service-nginx.conf <<EOF

server {

listen 80;

server_name adxservice.abc.com;

error_log /var/log/nginx/adx-service-error.log debug;

access_log /var/log/nginx/adx-service-access.log main;

location / {

proxy_pass http://test-adx-service:9201;

proxy_connect_timeout 300s;

proxy_read_timeout 300s;

proxy_send_timeout 300s;

proxy_next_upstream off;

proxy_redirect off;

proxy_buffering off;

proxy_set_header Host \$host;

proxy_set_header X-Real-IP \$remote_addr;

proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;

}

location ~ /\.ht {

deny all;

}

}

EOF

Create the Front nginx configuration file

mkdir -p /root/workspace/nginx/ && cd /root/workspace/nginx/

cat > nadx-front-nginx.conf <<EOF

server {

listen 80;

server_name adx.abc.com;

error_log /var/log/nginx/adx-front-error.log debug;

access_log /var/log/nginx/adx-front-access.log main;

location / {

proxy_pass http://test-adx-front:9202;

proxy_connect_timeout 300s;

proxy_read_timeout 300s;

proxy_send_timeout 300s;

proxy_next_upstream off;

proxy_redirect off;

proxy_buffering off;

proxy_set_header Host \$host;

proxy_set_header X-Real-IP \$remote_addr;

proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;

}

error_page 404 /404.html;

location = /404.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

EOF

Pull the image

docker pull reg.iferox.com/library/cusper-nginx:1.0.1

Run the Nginx service

docker run -itd \
--network adx-net \
--name test-adx-nginx \
-p 80:80 -p 443:443 \
-v /root/workspace/nginx/nadx-front-nginx.conf:/etc/nginx/conf.d/nadx-front-nginx.conf \
-v /root/workspace/nginx/nadx-service-nginx.conf:/etc/nginx/conf.d/nadx-service-nginx.conf \
-v /root/workspace/nginx/nadx-exchange-nginx.conf:/etc/nginx/conf.d/nadx-exchange-nginx.conf \
-v /root/workspace/hosts:/etc/hosts \
-v /data/dockerdata/nginx/log:/var/log/nginx \
reg.iferox.com/library/cusper-nginx:1.0.1

Verify access
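One way to confirm that Nginx answers for each of the three domains is to request the server with an explicit Host header, which works even before DNS or hosts entries are in place. The sketch below is a minimal example and not part of the nADX tooling: the function names vhost_status and check_vhosts are hypothetical, and it assumes curl is installed and that Nginx is published on port 80 of the Docker server.

```shell
#!/bin/sh
# Hypothetical helper: print the HTTP status code Nginx returns for one virtual host.
# $1 = Docker server IP, $2 = value of the Host header.
vhost_status() {
    # -m 5 caps each request at 5 seconds; curl prints 000 when the connection fails.
    curl -s -o /dev/null -m 5 -w '%{http_code}' -H "Host: $2" "http://$1/"
}

# Print one line per domain used in this guide.
check_vhosts() {
    for host in adx.abc.com adxservice.abc.com exchange.abc.com; do
        printf '%s -> HTTP %s\n' "$host" "$(vhost_status "$1" "$host")"
    done
}

# Usage (replace the IP with your Docker server IP):
#   check_vhosts 172.16.20.10
```

Any status other than 000 means Nginx answered for that domain. A 502 typically means Nginx could not reach the upstream container behind that domain.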

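Page setup

Obtain a License

Send an email to wangwei@iferox.com. We will generate a License as soon as possible and notify you by email.

Include the following in the email:

The purpose of your request

Your company name

The three domains used for ADX (Front domain, Service domain, Exchange domain)

Log in to the system

Login URL: http://adx.abc.com

Initial account: admin@nadx.com

Initial password: nadx@123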

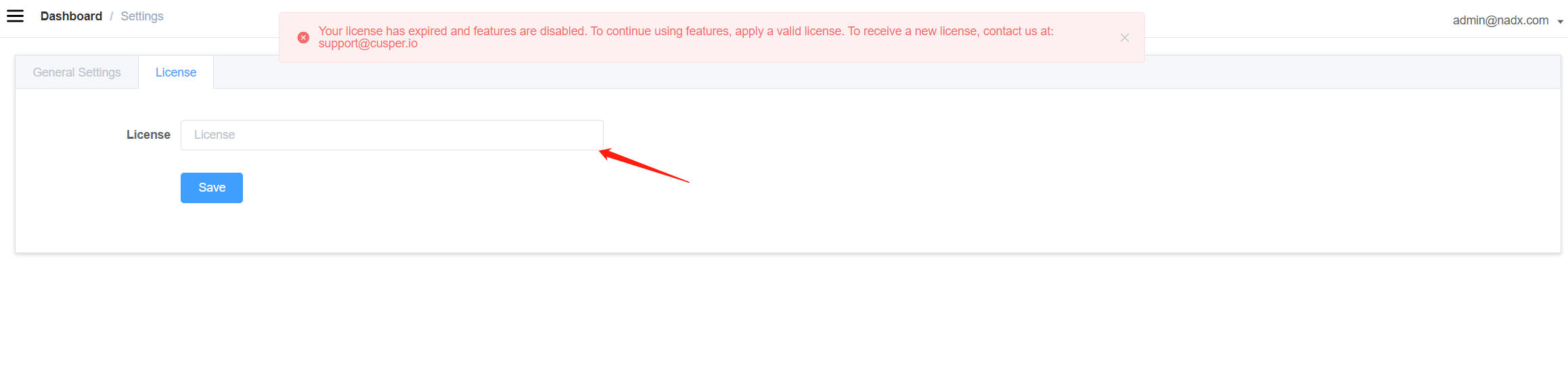

Set the License

Enter the License Code obtained in the first step at the position shown in the figure below, then save.

Monitoring setup

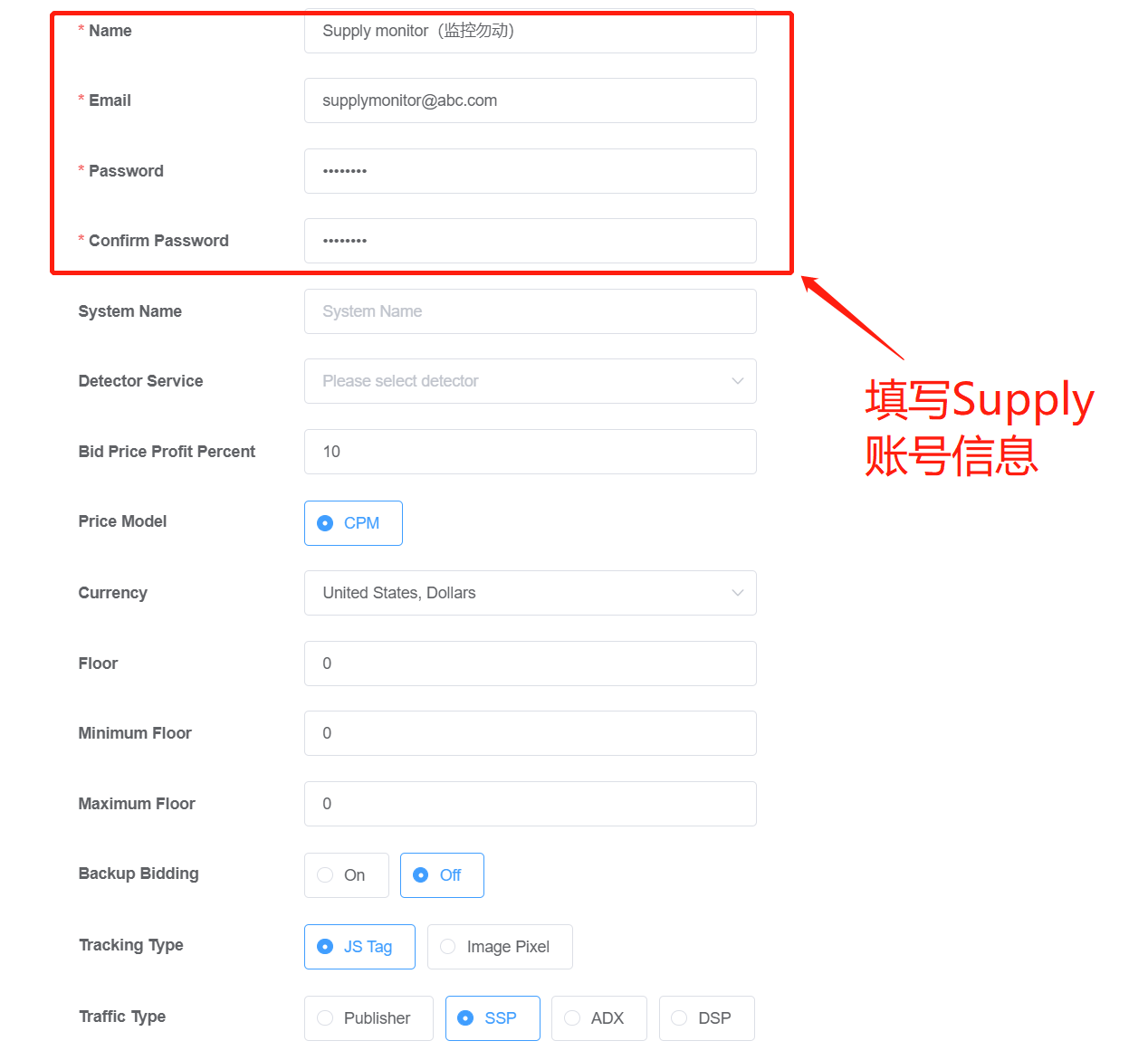

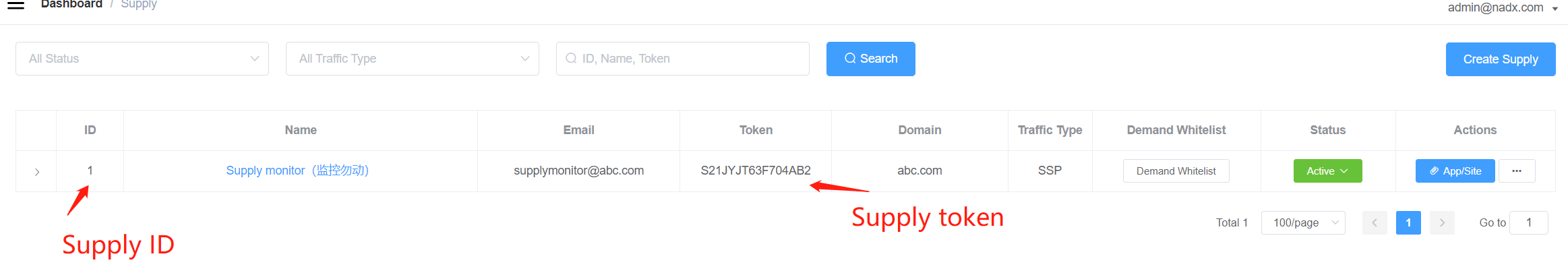

Create a Supply account

Create a Supply account and record its Supply ID and Supply Token, as shown below.

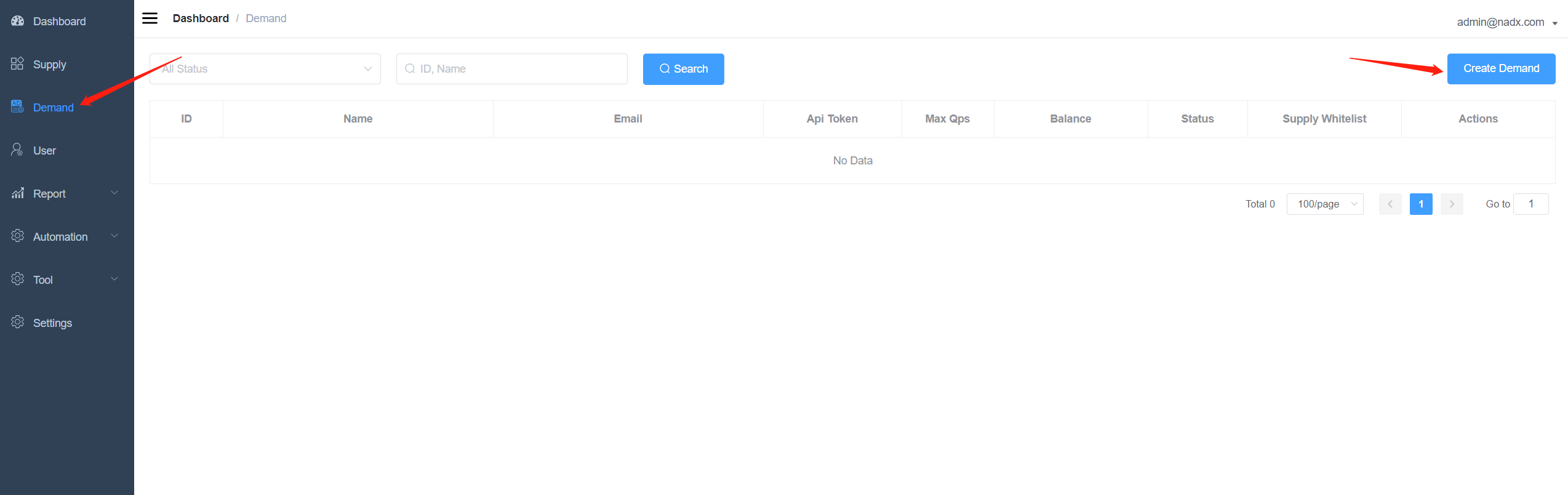

创建Demand账号

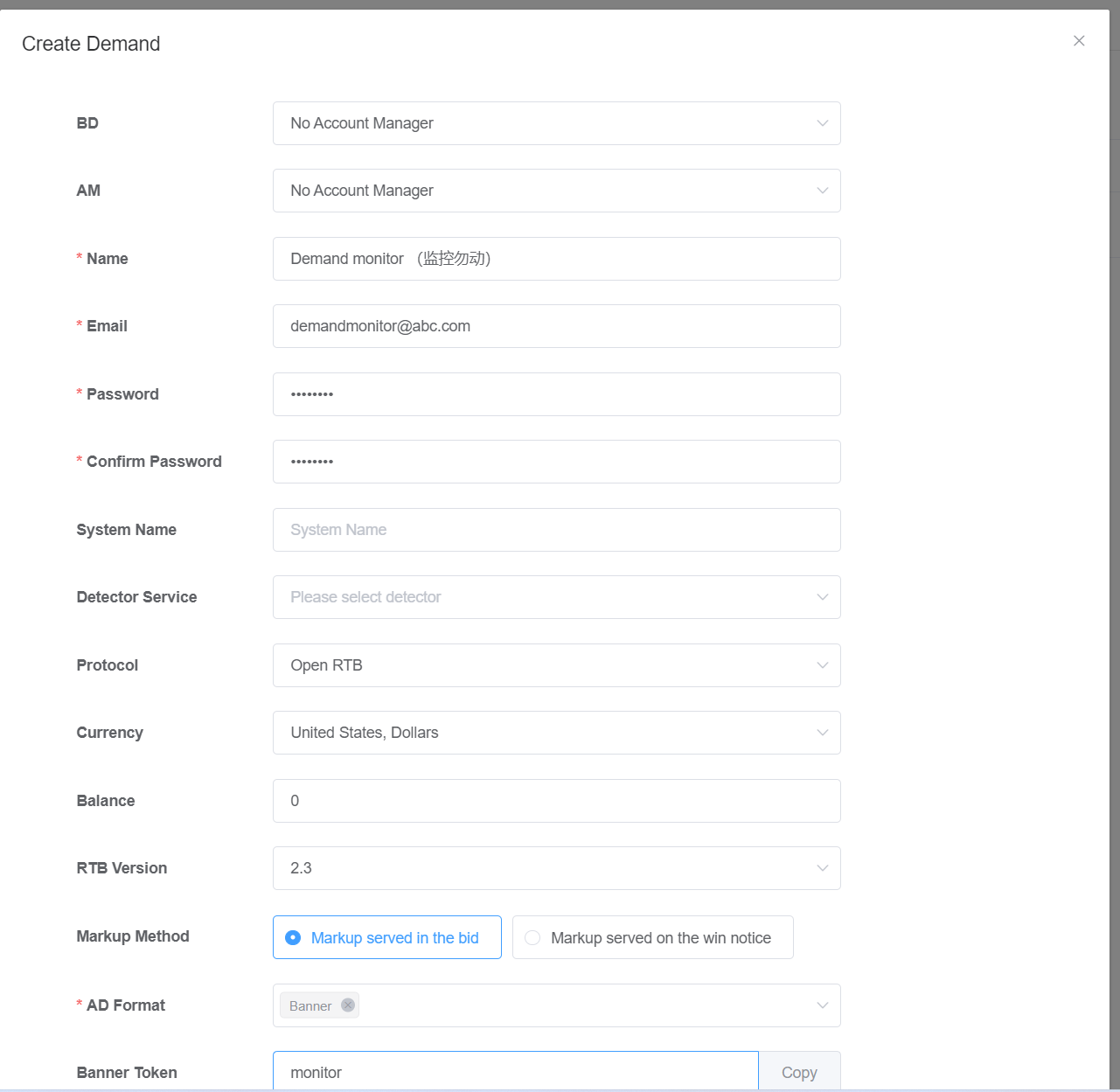

创建Demand账号,并记录Demand ID: 如下图,将必填字段填充完整,其他字段用默认就可以。

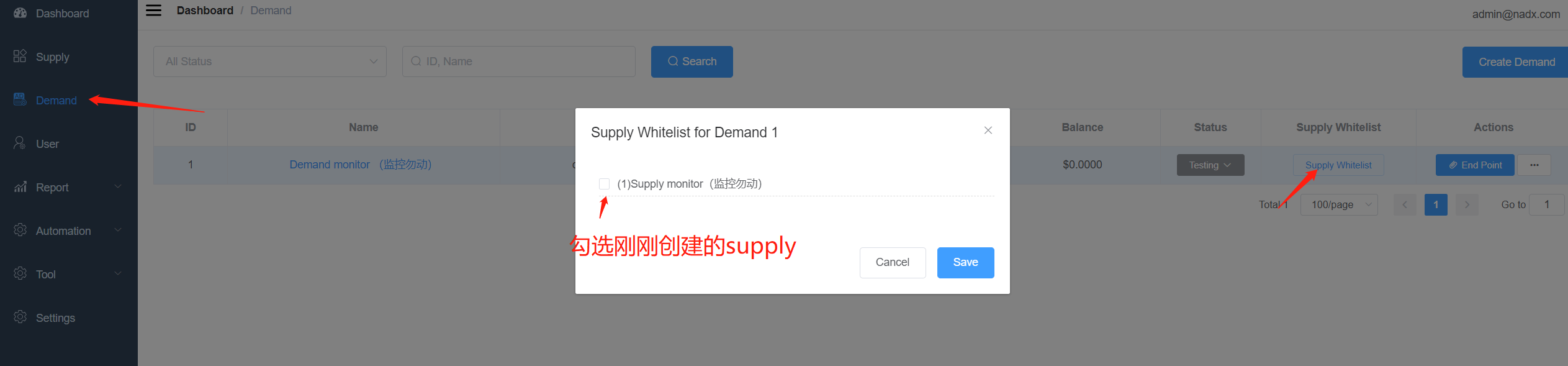

Associate Supply and Demand

Associate the Supply with the Demand and set the Demand status.

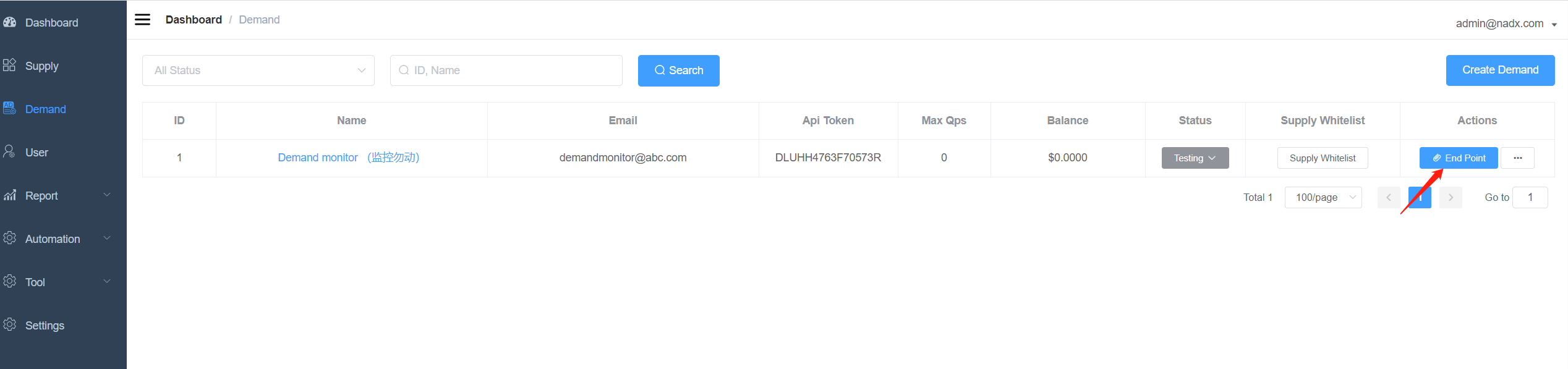

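Create an End Point for the Demand

Set the following values:

URL: http://${exchange_domain}/dsp/test, where ${exchange_domain} is exchange.abc.com in this example

Method: select POST

Dc Latency(ms): the timeout in milliseconds; set it to 1800

The steps are shown in the figure below.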

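Modify the application configuration files

Modify the Exchange configuration file

Using the Supply Token, Supply ID, and Demand ID recorded earlier, update the following items in this configuration file: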

/root/workspace/exchange/nadx-exchange.json

"stub.supply.token":"${SUPPLY_TOKEN}",

"stub.demand.id": ${DEMAND_ID},

After making the change, restart the Exchange service.

Modify the Monitor configuration file

/root/workspace/monitor/application.conf

supply-id =${SUPPLY_ID}

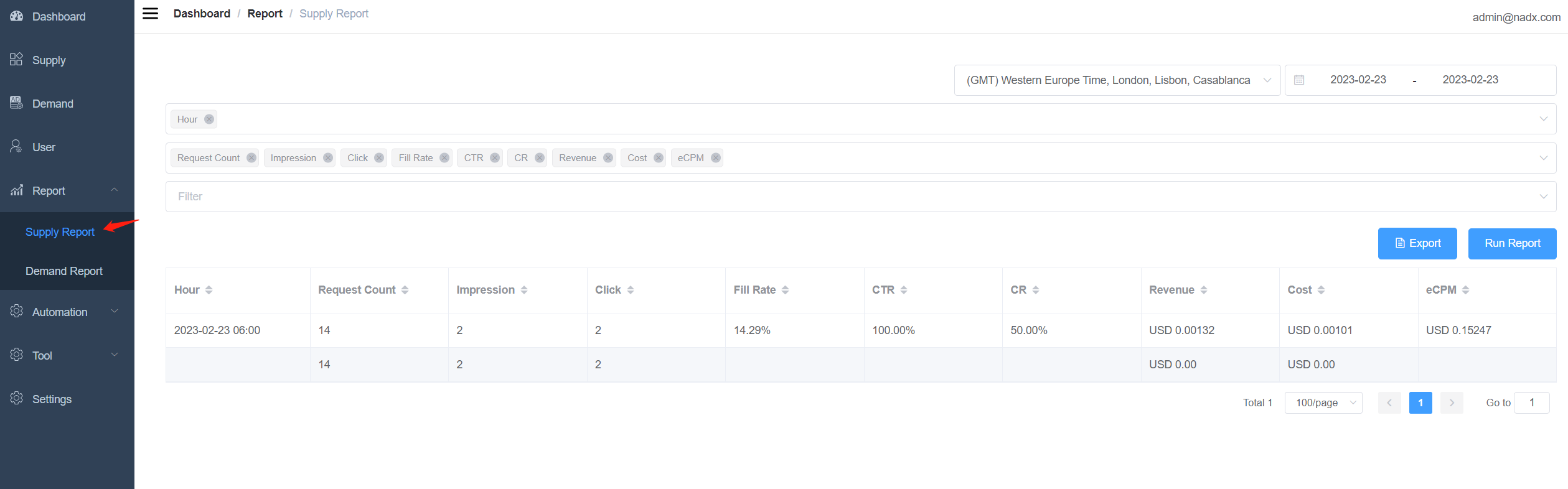
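The edits to both configuration files can also be scripted with sed instead of being made by hand. The following is a minimal sketch under stated assumptions: the function names edit_in_place, set_exchange_stub, and set_monitor_supply are hypothetical, each key sits on its own line with a literal value as in the files created in this guide, and the token contains only letters and digits.

```shell
#!/bin/sh
# Hypothetical helpers; both assume each key sits on its own line, as in this guide.

# Apply the sed arguments that follow $1 to file $1 and write the result back.
edit_in_place() {
    file="$1"; shift
    tmp=$(mktemp) || return 1
    # cat writes into the existing file instead of replacing it with a new one.
    if sed "$@" "$file" > "$tmp"; then cat "$tmp" > "$file"; status=$?; else status=1; fi
    rm -f "$tmp"
    return "$status"
}

# $1 = nadx-exchange.json, $2 = Supply Token, $3 = Demand ID (integer).
set_exchange_stub() {
    edit_in_place "$1" \
        -e "s|\"stub\.supply\.token\" *: *\"[^\"]*\"|\"stub.supply.token\":\"$2\"|" \
        -e "s|\"stub\.demand\.id\" *: *[0-9]*|\"stub.demand.id\":$3|"
}

# $1 = application.conf, $2 = Supply ID, $3 = Supply Token.
set_monitor_supply() {
    edit_in_place "$1" \
        -e "s|^\([[:space:]]*supply-id[[:space:]]*=[[:space:]]*\).*|\1$2|" \
        -e "s|^\([[:space:]]*supply-token[[:space:]]*=[[:space:]]*\).*|\1\"$3\"|"
}

# Usage:
#   set_exchange_stub /root/workspace/exchange/nadx-exchange.json "$SUPPLY_TOKEN" "$DEMAND_ID"
#   set_monitor_supply /root/workspace/monitor/application.conf "$SUPPLY_ID" "$SUPPLY_TOKEN"
#   docker restart test-adx-exchange test-adx-monitor
```

Only the matched keys are rewritten; every other line is copied through unchanged.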

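After making the change, restart the Monitor service.

Verify the monitoring data

Open Report and check whether monitoring data is present.

Congratulations: once Request Count, Impression, and Click show data, the deployment has succeeded and all services are running normally.

The monitoring heartbeat runs once per minute. Afterwards, you can use the hourly monitoring data to judge whether the system has a problem.

Start the ADX trading service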

Start Exchange

Create the configuration files

Create the exchange configuration file

Create /root/workspace/exchange/nadx-exchange.json

Using the Supply Token, Supply ID, and Demand ID recorded in the "Page setup" step, update the following items in the configuration file:

"stub.supply.token":"${SUPPLY_TOKEN}",

"stub.demand.id": ${DEMAND_ID},

mkdir -p /root/workspace/exchange && cd /root/workspace/exchange

cat > /root/workspace/exchange/nadx-exchange.json <<EOF

{

"app.env":"prod",

"app.http.port" : 9200,

"app.server.node" : 100011,

"app.server.region" : "us-east",

"app.system.name":"stageadx",

"app.server.protocol" : "http",

"app.server.domain" : "exchange.abc.com",

"app.client.latency.ms" : 20,

"app.notify.max.second" : 3600,

"ip.trust" : "127.0.0.1,192.168.0.0/16,172.16.0.0/12,10.0.0.0/8",

"rtb.router.max.connections" : 5,

"bid.connection.pool.size":50,

"bid.pipeline.size" : 1,

"http.server.instance" : 64,

"http.client.instance" : 1280,

"http.server.idle.seconds" : 5,

"http.client.idle.seconds" : 1,

"event.bus.timeout.ms" : 2000,

"stub.supply.token":"S4XW55M63ED9D50Z",

"stub.demand.id":1,

"header.country.key" : "COUNTRY",

"header.region.key" : "REGION",

"header.city.key" : "CITY",

"header.carrier.key" : "ISP",

"header.device.key" : "x-device",

"exchange.cache.host" : "test-adx-redis-exchange",

"exchange.cache.port" : 6379,

"exchange.cache.password" : "redis-user:1234",

"exchange.cache.pool.max.total" : 3000,

"currency.hash" : "hash_currency_exchange",

"ip.geo.city.mmdb.file" : "/opt/nadx/exchange/GeoIP2-City.mmdb",

"countries.csv.file" : "/opt/nadx/exchange/conf/countries.csv",

"banner.image.file" : "/opt/nadx/exchange/conf/banner-image-segment-min.html",

"cdn.server.domain" : "adx.abc.com",

"test.response.banner.file":"/opt/nadx/exchange/conf/bid-banner-response.json",

"test.response.native.file":"/opt/nadx/exchange/conf/bid-native-response.json",

"test.response.video.file":"/opt/nadx/exchange/conf/bid-video-response.json",

"log.rolling.interval": "hour",

"log.max.file.size.mb": 100,

"data.path" : "/data/nadx-exchange",

"log.path" : "/data/logs/nadx-exchange",

"log.level" : "DEBUG"

}

EOF

Create the filebeat configuration file

Create /root/workspace/exchange/filebeat.yml

mkdir -p /root/workspace/exchange && cd /root/workspace/exchange

cat > /root/workspace/exchange/filebeat.yml <<EOF

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /data/nadx-exchange/nadx-traffic.dat
  fields:
    log_topics: traffic
- type: log
  enabled: true
  paths:
    - /data/nadx-exchange/nadx-performance.dat
  fields:
    log_topics: performance
output.kafka:
  enabled: true
  codec.format.string: '%{[message]}'
  hosts: ["test-adx-kafka:9092"]
  topic: '%{[fields][log_topics]}'
  required_acks: 1
  compression: gzip
  compression_level: 9
  worker: 2
  keep_alive: 60
  max_message_bytes: 1000000
  reachable_only: true

EOF

Pull the image

docker pull reg.iferox.com/nadx/nadx-exchange:1.52.5.20230208a.bj

Run the Exchange service

docker run -itd \
--network adx-net \
--name test-adx-exchange \
-p 9200:9200 \
-v /root/workspace/exchange/nadx-exchange.json:/opt/nadx/exchange/conf/nadx-exchange.json \
-v /root/workspace/exchange/filebeat.yml:/opt/filebeat/filebeat.yml \
-v /root/workspace/hosts:/etc/hosts \
-v /data/dockerdata/exchange-data:/data \
reg.iferox.com/nadx/nadx-exchange:1.52.5.20230208a.bj
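The Exchange process may need a few seconds after docker run before it answers on port 9200 (an assumption; the actual start-up time is not documented here). A small retry loop avoids a false failure when the check runs too early. The helper name retry is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: run a command until it succeeds.
# $1 = maximum attempts, $2 = seconds to sleep between attempts, rest = command.
retry() {
    attempts="$1"; delay="$2"; shift 2
    n=1
    while [ "$n" -le "$attempts" ]; do
        "$@" && return 0
        # Sleep only when another attempt will follow.
        [ "$n" -lt "$attempts" ] && sleep "$delay"
        n=$((n + 1))
    done
    return 1
}

# Usage: poll the Exchange port up to 10 times, 3 seconds apart.
#   retry 10 3 docker exec test-adx-exchange curl -s -o /dev/null http://127.0.0.1:9200
```

Without the -f option, curl exits with 0 for any HTTP response, so the loop ends as soon as the port accepts connections, whatever the status code.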

Check the Exchange service

docker exec test-adx-exchange curl -s http://127.0.0.1:9200

Start the Monitor service

Create the configuration file

Create /root/workspace/monitor/application.conf

Using the Supply Token, Supply ID, and Demand ID recorded in the "Page setup" step, update the following items in the configuration file:

supply-id = "${SUPPLY_ID}"

supply-token = "${SUPPLY_TOKEN}"

mkdir -p /root/workspace/monitor/ && cd /root/workspace/monitor/

cat > /root/workspace/monitor/application.conf <<EOF

akka {

actor {

provider = "cluster"

}

remote {

log-remote-lifecycle-events = off

netty.tcp {

hostname = "127.0.0.1"

port = 2551

send-buffer-size = 256000b

receive-buffer-size = 256000b

maximum-frame-size = 128000b

}

}

cluster {

seed-nodes = [

"akka.tcp://nadx-monitor@127.0.0.1:2551"

]

metrics {

native-library-extract-folder = "/opt"

}

}

extendsion = [

"akka.cluster.metrics.ClusterMetricsExtension",

"akka.cluster.client.ClusterClientReceptionist"]

loggers = ["akka.event.slf4j.Slf4jLogger"]

loglevel = "INFO"

}

nadx {

name = "nadx-monitor"

log-file = "/data/logs/nadx_monitor.log"

druid-api-host = "http://adxservice.abc.com/report/overallApi"

request.sleep.time.ms = 10000

druid-api-limit-time-ms = 10000

supply-id = 1

supply-token = "S4XW55M63ED9D50Z"

delay-round = 3

monitor-interval = 120

transaction-system-monitor-enable = 1

system-file-path-separator = "/",

tracking = [

{

"name": "singapore",

"winNotificationHost": "http://exchange.abc.com/ad/win?auction_bid_id=",

"impressionHost": "http://exchange.abc.com/ad/impression?auction_bid_id=",

"clickHost": "http://exchange.abc.com/ad/click?auction_bid_id=",

"conversionHost": "http://exchange.abc.com/ad/conversion?auction_bid_id=",

"bidderHost": "http://exchange.abc.com/ad/bid"

}

]

}

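EOF

Pull the image

docker pull reg.iferox.com/nadx/nadx-monitor:0.2.2.20221014c.bj

Run the service

docker run -itd \
--network adx-net \
--name test-adx-monitor \
-v /root/workspace/monitor/application.conf:/opt/nadx/monitor/conf/application.conf \
-v /root/workspace/hosts:/etc/hosts \
-v /data/dockerdata/monitor/logs:/data/logs \
reg.iferox.com/nadx/nadx-monitor:0.2.2.20221014c.bj

Verify the monitoring data

Open Report and check whether monitoring data is present.

Congratulations: once Request Count, Impression, and Click show data, the deployment has succeeded and all services are running normally.

The monitoring heartbeat runs once per minute. Afterwards, you can use the hourly monitoring data to judge whether the system has a problem.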
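If no data appears in Report, a reasonable first step is to confirm that the containers started in this section are still running. The sketch below uses docker inspect for that. The function names container_state and report_containers are hypothetical, and the container names are the ones used in this guide.

```shell
#!/bin/sh
# Hypothetical helper: print "running", "stopped", or "missing" for one container.
container_state() {
    state=$(docker inspect -f '{{.State.Running}}' "$1" 2>/dev/null) || { echo missing; return; }
    if [ "$state" = "true" ]; then echo running; else echo stopped; fi
}

# Print the state of each container named on the command line.
report_containers() {
    for name in "$@"; do
        printf '%s: %s\n' "$name" "$(container_state "$name")"
    done
}

# Usage:
#   report_containers test-adx-nginx test-adx-exchange test-adx-monitor
```

For a container reported as stopped, `docker logs --tail 50 <name>` shows its most recent output.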
